During my professional career, I have always worked with data warehouse solutions of high maturity and strategic importance to the company. The fundamental part of these solutions was always the core layer, where all the data is integrated into a single model to produce a single source of truth for further usage. This part of the data transformation is typically the most expensive to develop and the most complicated to explain to the project sponsor. Nowadays I see a lot of tools that offer almost immediate usage of loaded data without the need for a core layer, and design patterns that support this approach. Does this mean that the “classical” three-level architecture with a core layer is obsolete and cannot compete with the latest design patterns? I hope not, as I am rather conservative with regard to architecture, but let’s try to support this opinion with some facts.

At the outset, I should mention that my experience with data warehouses has been limited mainly to solutions used in the financial sector. This might lend a slight bias to my point of view, as this industry has some specifics, for instance regulatory requirements, which typically lead to higher demands on data quality, consistency and auditability. Another important fact is that all these companies have been in business for a significant amount of time. Since their solutions were designed with a core layer some ten years ago, it is unlikely that such a company will remove the core or start anew by building a DWH solution from scratch. Firstly, it is hard to justify such costs when the solution still provides the needed services. Secondly, you do not want to lose the data and its continuity, so a major change would necessitate a migration project to support the move to a new solution pattern. And thirdly, there will be strong sentiment in the team in favor of the old solution, because the new one might require new skills, a different team setup and modified processes.

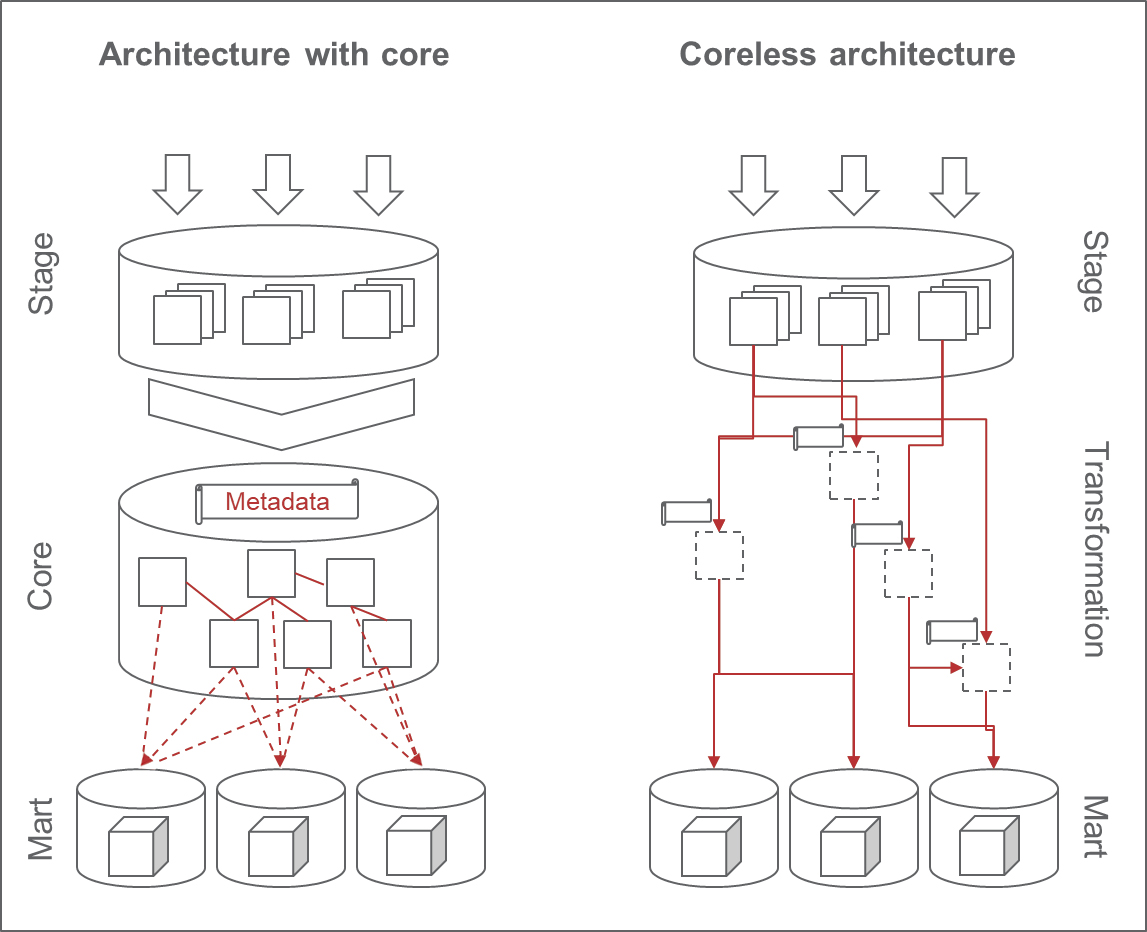

So why bother with the core layer at all? In theory, the core pays off when you need to combine data from different sources to support more than one solution. As long as there is only one output, you can still manage all the transformations and combinations of the sources at the level of this output, and you can avoid using a preparation layer. The same applies when only one source system feeds data into the solution: you can manage the transformation at the output level. However, as soon as you have multiple inputs and multiple outputs, the solution becomes more difficult to manage.
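The reasoning above can be illustrated with a rough count of the integration pipelines that have to be maintained. This is only a sketch under a simplifying assumption that is not stated in the text: every output needs data from every source. The function names are hypothetical.

```python
def pipelines_without_core(sources: int, outputs: int) -> int:
    """Assumption: each output integrates each source on its own."""
    return sources * outputs


def pipelines_with_core(sources: int, outputs: int) -> int:
    """Each source is loaded into the core once; each output reads the core once."""
    return sources + outputs


# Compare a few hypothetical landscapes: (number of sources, number of outputs).
for sources, outputs in [(1, 3), (3, 1), (4, 5)]:
    print(
        f"{sources} sources, {outputs} outputs: "
        f"{pipelines_without_core(sources, outputs)} pipelines without a core, "
        f"{pipelines_with_core(sources, outputs)} with a core"
    )
```

Under this assumption, a single source or a single output gives the core no advantage (3 pipelines without it, 4 with it), while four sources and five outputs mean 20 pipelines without a core against 9 with one.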

This is the point in the traditional approach where the core layer comes in handy. Let us presume that we have two sources providing data on clients. Dedicated structures to accommodate client data are designed in the core layer, and the transformations that fill them are created. Checks and controls are put in place, and subsequent solutions can easily refer to this neat new structure to get data about clients. Besides combining data from multiple source systems, additional data is added at this level as well. The applied data model encourages the derivation of business-oriented data, for instance client segmentation. In some cases this approach also leads to the loss of metadata at the row level: a source row is distributed among several relational tables that together contain the complete information on the client, but it is no longer known which source system the data was retrieved from. The core layer behaves like the ultimate source system, the only source of truth for the further usage of the data.
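A minimal sketch of such a core load might look as follows. Everything in it is an assumption made for illustration: the source names (`crm`, `core_banking`), the field names, the segmentation threshold and the "first source wins" rule are hypothetical. The `source_system` attribute shows one way to keep the row-level lineage that is otherwise lost.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoreClient:
    client_id: str
    name: str
    segment: str        # business-oriented attribute derived in the core
    source_system: str  # row-level lineage that a core model can easily lose


def derive_segment(balance: float) -> str:
    # Hypothetical segmentation rule, for illustration only.
    return "premium" if balance >= 100_000 else "standard"


def load_core_clients(rows_by_source: dict[str, list[dict]]) -> list[CoreClient]:
    core: dict[str, CoreClient] = {}
    # Sources are processed in dict order; the first valid row per client wins.
    for source_system, rows in rows_by_source.items():
        for row in rows:
            # Basic data quality check: skip rows without a key or a name.
            if not row.get("client_id") or not row.get("name"):
                continue
            core.setdefault(
                row["client_id"],
                CoreClient(
                    client_id=row["client_id"],
                    name=row["name"],
                    segment=derive_segment(row.get("balance", 0.0)),
                    source_system=source_system,
                ),
            )
    return sorted(core.values(), key=lambda client: client.client_id)


clients = load_core_clients(
    {
        "crm": [
            {"client_id": "C1", "name": "Alice", "balance": 250_000.0},
            {"client_id": "C2", "name": "", "balance": 10.0},  # fails the check
        ],
        "core_banking": [
            {"client_id": "C1", "name": "Alice A.", "balance": 240_000.0},
            {"client_id": "C2", "name": "Bob", "balance": 5_000.0},
        ],
    }
)
for client in clients:
    print(client)
```

Subsequent solutions would read only `clients` and never the two sources directly, which is what makes the core behave like the single source of truth.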

It is also fair to mention that the presence of the core layer sends a strong signal to regulators with regard to data management and data quality processes. It is very difficult to build a functioning core layer without addressing topics like data lineage or data quality. So, when there is a core layer, it is easier to persuade a regulator that the company really cares about data quality and other topics of interest to the governing bodies. It is easy to show the data flow and point out how the reports are built. More importantly, the presence of the core layer suggests that all the subsequent solutions are using the same data.

Some of the latest solutions suggest that you do not need the core layer to prepare the data. You simply combine the data you need for the business solution you are currently supporting. If by chance you need the same data again for some other use case, you can either reuse the existing solution or duplicate it with some minor adjustments. The combination of data from different sources is managed individually for every use case and does not require the core layer. Does this approach make sense? Is it sustainable? And how come the good old core layer is no longer needed in these patterns?

Organizational, technological and business circumstances have evolved significantly in the past years. Organizations have started to embrace agile methodology (even if with some adjustments), which typically leads towards decentralization of data governance and usage. Adoption of cloud-based technologies has reduced the importance of data deduplication and performance optimization. Machine learning combined with data science has also reduced the effort related to data structure design and governance. Most markets have experienced important changes in the past years that influenced the strategy of the companies operating in them; change is an inevitable part of business processes and it must be supported, including by data solutions. To cut it short: if there are now ten independent teams with end-to-end responsibility for delivery instead of one centralized team, the core layer might look more like a liability or a bottleneck than an advantage. As soon as you do not have to worry about performance, since hardware costs have decreased thanks to cloud-based infrastructure, the duplication of transformations or data structures is no longer regarded as a critical issue. When you need a modular system to support quickly changing business demands, development of a stable core might seem inefficient in the short run. But does it all mean that the days of the core layer are over?

My answer is no, or at least not for now. Core layer architecture is a very simple design. It requires some management on a central level to ensure the core is consistent and stable, but it provides a good quality service. I do agree that some aspects of the core layer architecture must change to keep up with the environment (e.g. parallel processing) and, as previously mentioned, it also brings a certain level of rigidity. But in exchange, it offers a single source of truth to all solutions, which is easy to understand. On the other hand, the “stage-mart” solution can work as well, thanks to all the changes we have discussed. But to make it sustainable and easy to use, there must be a reliable metadata solution to support it. If every report or output can be supported by an individual set of data compiled from different sources, users need a way to search for the data and the solution parts they might reuse in their own solution. Instead of looking for a table named “Client” in the core, there must be an efficient way to collect all tables from all the systems that carry a client label. There are solutions that support such usage, even with automated data classification and machine learning modules, but they must be put in place together with the coreless solution. Therefore, I believe it is not enough to simply remove the core and tell your users to get used to it; a metadata solution must be there to support and assist them.
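To make the idea of searching by label concrete, here is a minimal sketch of a metadata catalog. It assumes that tables are registered by hand with a set of labels; the class names, systems and table names are hypothetical, and a real metadata solution would add automated classification, lineage and access control on top.

```python
from dataclasses import dataclass, field


@dataclass
class TableEntry:
    system: str
    table: str
    labels: set[str] = field(default_factory=set)


class MetadataCatalog:
    """Hypothetical in-memory catalog of tables across all systems."""

    def __init__(self) -> None:
        self._entries: list[TableEntry] = []

    def register(self, entry: TableEntry) -> None:
        self._entries.append(entry)

    def find_by_label(self, label: str) -> list[TableEntry]:
        # Case-insensitive match, so "Client" and "client" find the same tables.
        wanted = label.lower()
        return [
            entry
            for entry in self._entries
            if wanted in {existing.lower() for existing in entry.labels}
        ]


catalog = MetadataCatalog()
catalog.register(TableEntry("crm", "customers", {"client", "contact"}))
catalog.register(TableEntry("core_banking", "account_holders", {"Client", "account"}))
catalog.register(TableEntry("core_banking", "transactions", {"payment"}))

for entry in catalog.find_by_label("client"):
    print(f"{entry.system}.{entry.table}")
```

Here the user does not need to know that client data lives in `customers` in one system and in `account_holders` in another; the label does the work that the table name “Client” does in the core.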

I must add that even core-based designs deserve the support of a metadata solution, but I get the impression that the need for one is much more crucial in a coreless architecture. So, in the end, it might be easier for some companies to persuade investors to fund the development of a core layer rather than a robust metadata solution to support the users.

I would like to follow this train of thought in the next article on the topic of how to replace the core layer with metadata (and whether it is worth it).

Author: Tomáš Rezek

Information Management Leader