Digital transformation is a vague concept that different people understand in different ways. Most would agree, however, that it involves new technologies and their use for new types of services, products and processes.

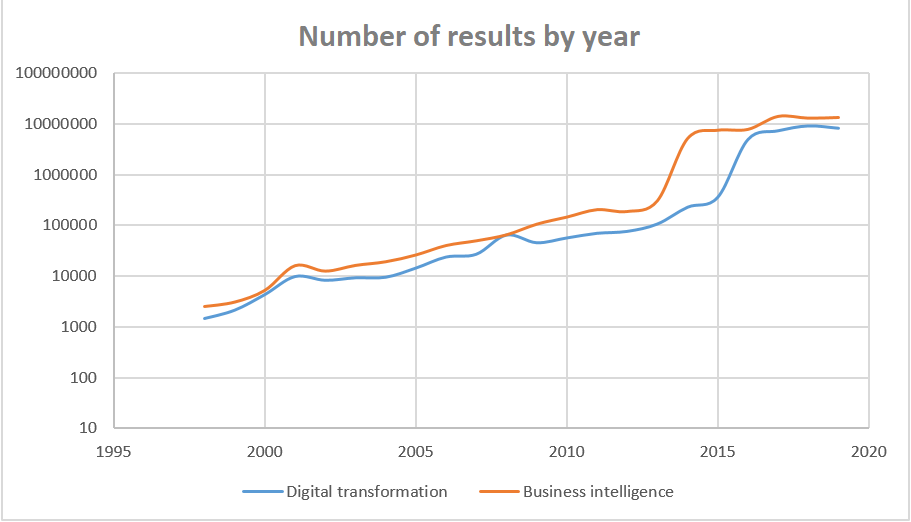

The term digital transformation has a long history. Google lists 1,450 search results for the term from 1998, the year Google itself was founded and went on to become an iconic example of digital transformation. It returns 4,320 results for 2000 and 56,800 for 2010. For 2018 it returns 9,300,000 results, which is 1,840,000 more than for 2017.

One can expect that the story of the impact of digital transformation on IT will be similar to that of business intelligence (BI), just one or two decades later. It took more than ten years for people to notice what business intelligence had to offer and what it meant for data management.

Figure 1: The number of search results that Google returns for individual years for the terms “digital transformation” and “business intelligence”. The curve for digital transformation follows that of business intelligence almost exactly, delayed by a few years.

Regarding data architecture, business intelligence has brought a clear requirement for uniform data integration across the entire organization. This requirement has led either to data warehouses with data models oriented towards the organization's business domains, or to groups of specialized operational data warehouses built for specific business requirements. In terms of data competencies and services, business intelligence has led to the development of data quality, data integration and master data management. From the business management perspective, it has allowed every decision to be supported by the necessary up-to-date data in user-friendly reports. From the data management perspective, it has proved the importance of metadata and of its processing and analysis. Finally, from the technological perspective, it has brought ETL processes, data servers that enable efficient queries on large volumes of data, and solutions for predefined analyses such as OLAP databases.

It is not yet clear what overall impact digital transformation will have on IT. However, its influence on data architecture is already beginning to take shape. New types of services and products are built on the processing of large amounts of data from many sources, which is unmanageable in traditional data warehouses built on relational databases. Relational databases are optimized for processing SQL queries, not for the complex calculations of predictive algorithms, text analysis or voice and image recognition, and they are very expensive as storage for large amounts of data. The cost lies not only in storage but also in the data warehousing processes themselves, which require accurate data descriptions, high-quality data and the ability to integrate with other domains in the data warehouse.

Digital transformation is associated with new types of analysis, the use of artificial intelligence, new types of communication with customers and new types of products directly based on customer data.

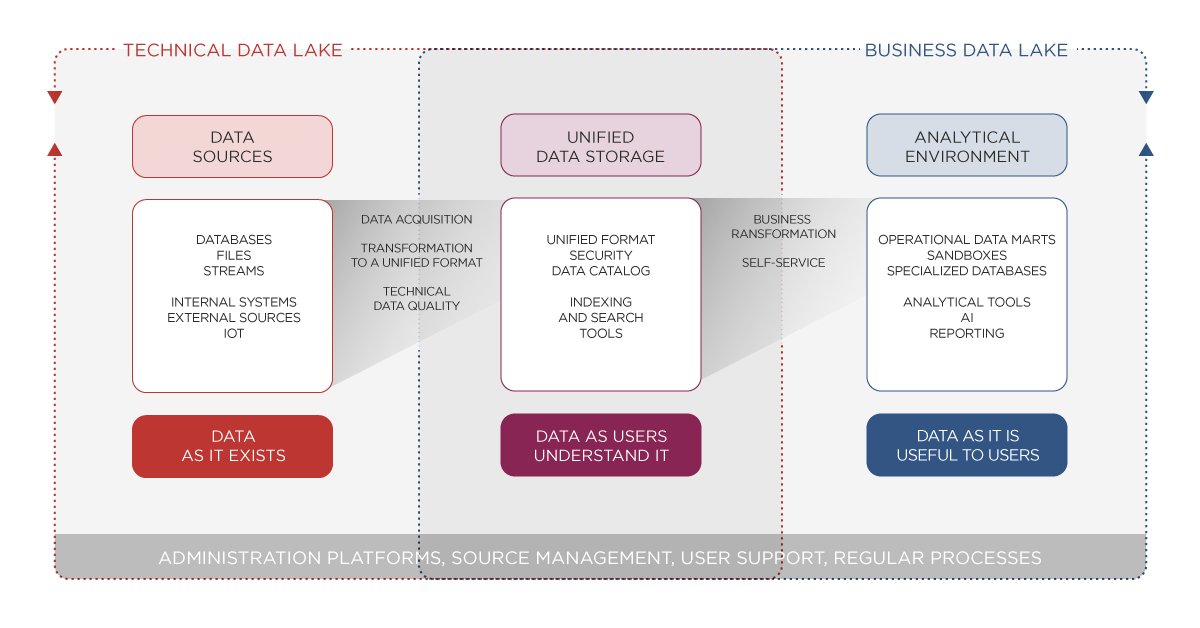

Digital transformation therefore requires a new approach to storing and processing data: cheaper data storage combined with scalable computing power, in other words a data lake. The architecture of a typical data lake is shown in Figure 2.

Figure 2: The architecture of a typical data lake

But just as acquiring a powerful server does not by itself address the requirements of BI, acquiring a data lake environment does not by itself address the requirements of digital transformation. For a data lake to deliver real business benefits, the necessary functions and competencies need to be created and developed. The following are the ones I consider most important.

- Ability to quickly ingest data into the data lake. Adding new data files must be technically and procedurally quick and easy, whether it be a regular data load, a one-time upload or the connection of a data stream.

- Storing data together with its structure. Data formats such as Avro and Parquet store the data structure (schema) together with the data and allow the storage of structures more complex than plain relational tables.

- Ability to search data. It is important that the data search is not based only on a data catalog. Modern technology allows data to be indexed in such a way that it is possible to answer questions such as “Where is information about product KP12345 located in the data lake?” or “Where are the telephone numbers?”

- Ability to use a variety of computing components. As the number of users working with data increases, so does the number of data processing tools they require. A data lake must be able to connect not only to standard reporting tools but also to various tools for artificial intelligence and machine learning. This often involves transferring data to special storage for these systems.

- Sandboxing and self-service are proving to be critical features of data lake solutions. More and more users are able to work with data independently and create their own solutions. And if a data lake offers the features they need, they are happy to skip the stage of precisely specifying requirements and waiting for them to be implemented.
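The search capability in the list above can be illustrated with a small sketch. It builds two indexes over an in-memory stand-in for a data lake: one by value (to answer “Where is information about product KP12345?”) and one by detected data type (to answer “Where are the telephone numbers?”). Everything here is an assumption made for illustration: the file paths, the column names, the sample rows, the phone number pattern and the function names are hypothetical, and a real data lake would rely on a dedicated indexing or search engine rather than Python dictionaries.

```python
import re
from collections import defaultdict

# Hypothetical stand-in for a data lake: file path -> rows (column -> value).
SAMPLE_LAKE = {
    "raw/orders/2018-05.parquet": [
        {"order_id": "1001", "product_code": "KP12345", "qty": "2"},
        {"order_id": "1002", "product_code": "KP99999", "qty": "1"},
    ],
    "raw/crm/customers.avro": [
        {"customer_id": "C1", "contact": "+420 601 123 456"},
        {"customer_id": "C2", "contact": "+420 777 000 111"},
    ],
    "raw/support/tickets.avro": [
        {"ticket_id": "T1", "note": "Customer reports defect in KP12345"},
    ],
}

# Deliberately simple, assumed pattern: optional "+", then at least nine
# characters made of digits, spaces or hyphens, starting and ending in a digit.
PHONE_PATTERN = re.compile(r"^\+?\d[\d\s-]{7,}\d$")


def build_index(lake):
    """Scan every value once and record where each token and type occurs."""
    value_index = defaultdict(set)  # token -> {(path, column)}
    type_index = defaultdict(set)   # detected type -> {(path, column)}
    for path, rows in lake.items():
        for row in rows:
            for column, value in row.items():
                # Index every alphanumeric token, case-insensitively.
                for token in re.findall(r"[A-Za-z0-9]+", value):
                    value_index[token.lower()].add((path, column))
                # Tag the column if the whole value looks like a phone number.
                if PHONE_PATTERN.match(value.strip()):
                    type_index["phone"].add((path, column))
    return value_index, type_index


def find_value(value_index, term):
    """Return the (path, column) pairs whose values contain the term."""
    return sorted(value_index.get(term.lower(), set()))


def find_type(type_index, type_name):
    """Return the (path, column) pairs tagged with the given data type."""
    return sorted(type_index.get(type_name, set()))


if __name__ == "__main__":
    value_index, type_index = build_index(SAMPLE_LAKE)
    print(find_value(value_index, "KP12345"))
    print(find_type(type_index, "phone"))
```

The point of the sketch is that the index is built from the content of the data rather than from a manually maintained catalog, so a product code mentioned in a free-text support note is found just as readily as one stored in a dedicated column.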

Author: Ondřej Zýka

Information Management Principal Consultant